Hi, my name is Lee Cuong. Welcome to my blog!

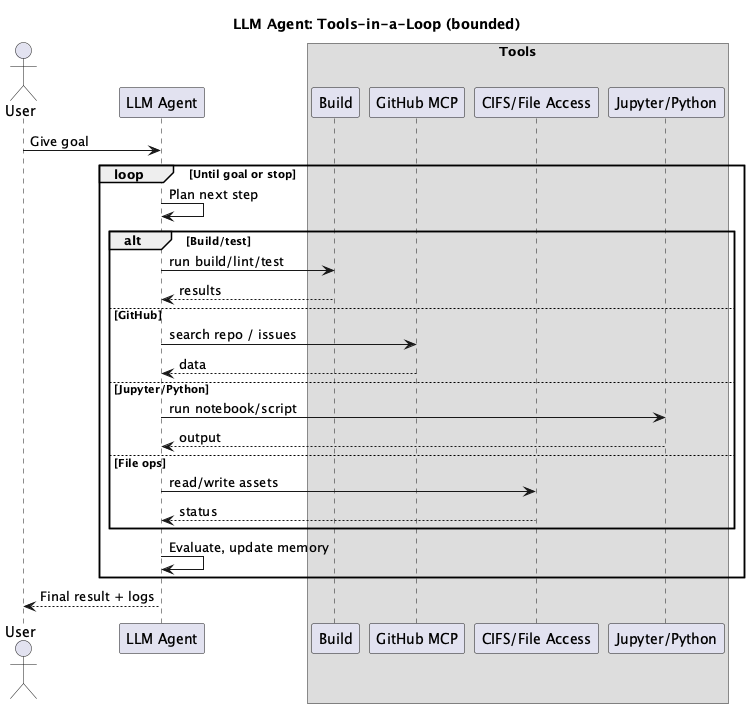

I’ve seen many people argue about the word “agent”. Some love it, some roll their eyes. Recently I read a piece that made it very clear: an LLM agent runs tools in a loop to achieve a goal (see Simon Willison’s explanation). Simple. Not a little human in the computer. Not a new kind of intelligence. Just a language model plus a toolbox, running again and again until it reaches the target or it stops.

This matches what I’ve been doing for the last two months with what I call VIBE coding (you can read it as vibe coding too). I built a few small web apps. Nothing big. But the process felt very different. I wasn’t sitting alone with a blank editor. I was sitting with a helper that could reach for tools on demand.

Here’s how it feels in real life:

- I give a goal in plain language. For example: “Make a tiny Flask app with one page that uploads a PNG and compresses it.”

- The agent picks a tool: search a repo, call a build script, run Python, open a notebook, check a server, or read a config file.

- It reads the result, thinks a bit, and chooses the next tool. Sometimes it tries two or three ideas.

- It loops. Think → try → observe → adjust. Then again. And again.

- When it’s done (or stuck), it shows me the outcome and the logs.

That’s it. It sounds basic, but it works. Because the “loop” is where the learning happens. Humans also learn by trying and checking, right? We make a small move, we see what happened, we correct. The agent does the same with tools.
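To make the think → try → observe rhythm concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the two toy tools, the `scripted_model` function that stands in for a real language model, and the `run_agent` name. A real agent would ask an LLM for the next action instead of following a fixed script.

```python
# Hypothetical tools: plain functions that take text and return text.
def shout(text):
    return text.upper()

def word_count(text):
    return str(len(text.split()))

TOOLS = {"shout": shout, "word_count": word_count}

def scripted_model(goal, history):
    """Stand-in for the LLM. Returns (tool_name, argument), or ("done", answer)."""
    if len(history) == 0:
        return "shout", goal
    if len(history) == 1:
        return "word_count", history[0]["result"]
    return "done", f"{history[0]['result']} ({history[1]['result']} words)"

def run_agent(goal, model=scripted_model, max_steps=5):
    history = []
    for _ in range(max_steps):
        action, argument = model(goal, history)   # think
        if action == "done":
            return argument, history
        result = TOOLS[action](argument)          # try
        history.append(                           # observe
            {"tool": action, "argument": argument, "result": result}
        )
    return None, history  # gave up: the step budget ran out

answer, log = run_agent("ship small things")
print(answer)  # SHIP SMALL THINGS (3 words)
```

The `history` list is the whole trick: each round, the model sees what the previous tools returned and picks the next move from there.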

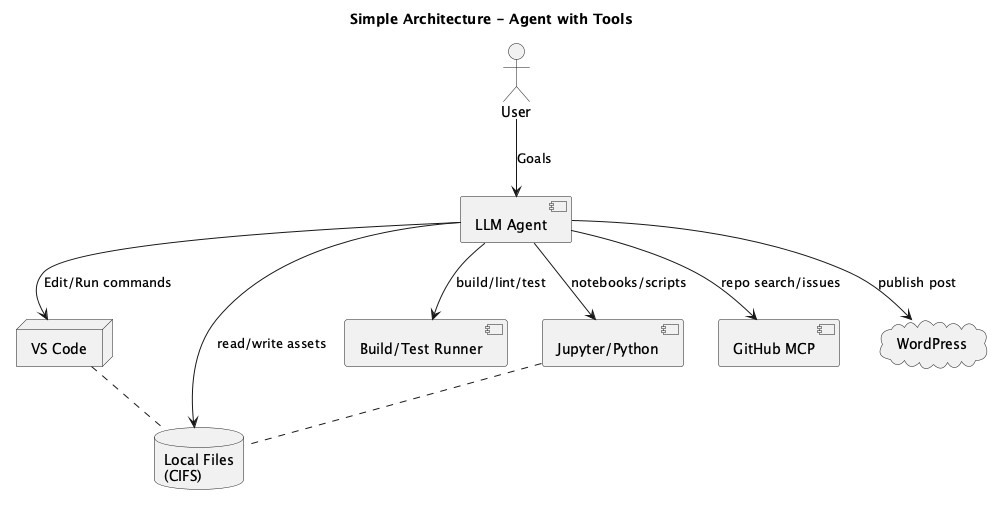

My setup in VS Code

If you open VS Code and look at the tools panel (depending on your setup), you’ll see how this connects. My agent can use:

- Build and test tools: run a build, lint, or a quick unit test.

- Extensions and MCP servers: GitHub MCP for repo queries and issues; CIFS/file share access for local files; and others that help with automation checks.

- Jupyter and Python: run a notebook, test a function, or print a quick chart.

- Local scripts: tiny helpers for converting images, checking ports, or validating JSON.

From my understanding, an “agent” is just a good manager of these tools. It doesn’t know everything. It just speaks clearly to tools, reads the outputs, and keeps going until the goal is met—or it stops because the loop is bounded. If you’ve seen tool-use/function-calling demos from Claude or OpenAI, it’s the same idea in practice (Anthropic’s docs; OpenAI’s 2023 function-calling update).
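As a rough illustration of "speaking clearly to tools", the sketch below keeps a small registry of tools with plain-language descriptions and runs one tool call written as JSON. The `{"name": ..., "arguments": {...}}` shape is my own simplification, loosely in the spirit of function calling, and not any vendor's exact wire format. The `tool` decorator, `describe_tools`, `dispatch`, and both example tools are hypothetical names.

```python
import json

TOOLS = {}

def tool(description):
    """Register a function as a tool, with a description the model can read."""
    def decorator(fn):
        TOOLS[fn.__name__] = {"description": description, "fn": fn}
        return fn
    return decorator

@tool("Return 'valid' if the text parses as JSON, otherwise the parse error.")
def validate_json(text):
    try:
        json.loads(text)
        return "valid"
    except json.JSONDecodeError as exc:
        return f"invalid: {exc.msg}"

@tool("Count the lines in a piece of text.")
def count_lines(text):
    return str(len(text.splitlines()))

def describe_tools():
    """Build the tool list that would be shown to the model."""
    return "\n".join(
        f"- {name}: {spec['description']}" for name, spec in sorted(TOOLS.items())
    )

def dispatch(call_json):
    """Run one tool call shaped like {"name": ..., "arguments": {...}}."""
    call = json.loads(call_json)
    spec = TOOLS.get(call["name"])
    if spec is None:
        # Return the problem as text so the model can read it and adjust.
        return f"error: unknown tool {call['name']!r}"
    return spec["fn"](**call["arguments"])

print(describe_tools())
print(dispatch(json.dumps({"name": "count_lines", "arguments": {"text": "a\nb"}})))
```

Unknown tools come back as an error string rather than an exception, so the failure becomes one more observation in the loop.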

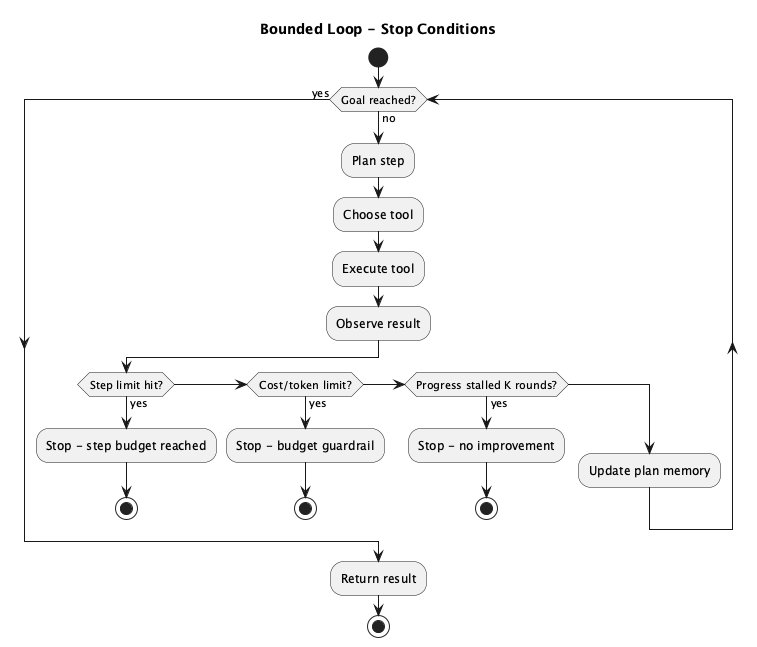

Bounded loops keep us safe

On social media, I saw people warn about infinite loops and token waste. They’re right. A useful agent has guardrails:

- It stops after N steps or M minutes.

- It stops if cost or token count is too high.

- It stops if progress stalls for K rounds.

- It logs every action so I can review and learn.

When a loop is bounded like this, we control the risk. The agent does not wander forever. It plays within a fence. This matters when you pay per token or when you have sensitive data. Multi‑agent frameworks like AutoGen also show why supervision and limits are healthy for real work (paper).
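The guardrails above can be sketched as one small object that the loop consults after every step. This is only an illustration under my own assumptions: the `Guardrails` class, its field names, and the default limits are invented here, and a real agent would fill in `tokens_used` and `made_progress` from its own bookkeeping.

```python
import time
from dataclasses import dataclass, field

@dataclass
class Guardrails:
    max_steps: int = 10          # N steps
    max_seconds: float = 300.0   # M minutes, expressed in seconds
    max_tokens: int = 50_000     # token (cost) budget
    max_stalled: int = 3         # K rounds without progress
    steps: int = 0
    tokens: int = 0
    stalled: int = 0
    started: float = field(default_factory=time.monotonic)
    log: list = field(default_factory=list)

    def record(self, action, tokens_used, made_progress):
        """Log one finished step and update the counters."""
        self.steps += 1
        self.tokens += tokens_used
        self.stalled = 0 if made_progress else self.stalled + 1
        self.log.append((self.steps, action, tokens_used, made_progress))

    def stop_reason(self):
        """Return why the loop must stop, or None if it may continue."""
        if self.steps >= self.max_steps:
            return "step limit"
        if time.monotonic() - self.started >= self.max_seconds:
            return "time limit"
        if self.tokens >= self.max_tokens:
            return "token limit"
        if self.stalled >= self.max_stalled:
            return "stalled"
        return None

guard = Guardrails(max_steps=5, max_stalled=2)
while guard.stop_reason() is None:
    # A real loop would plan, call a tool, and observe here.
    guard.record("run tests", tokens_used=100, made_progress=False)
print(guard.stop_reason())  # stalled
```

Keeping the checks in one place means the loop itself stays short, and the `log` list gives me something to review afterwards.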

@startuml

title Bounded Loop – Stop Conditions

start

while (Goal reached?) is (no)

:Plan step;

:Choose tool;

:Execute tool;

:Observe result;

if (Step limit hit?) then (yes)

:Stop – step budget reached;

stop

elseif (Cost/token limit?) then (yes)

:Stop – budget guardrail;

stop

elseif (Progress stalled K rounds?) then (yes)

:Stop – no improvement;

stop

else

:Update plan memory;

endif

endwhile (yes)

:Return result;

stop

@enduml

What I built in two months

I’ll keep it honest: I didn’t ship a unicorn. In the last two months, I shipped small, useful things:

- A tiny web app to resize and compress images for my posts.

- A Lucky Draw app for my master class at VGU so we don’t have to fight for team members during group work.

- A fun Tarot card reader app that picks random cards and shows meanings.

- A Python script that reads a Markdown file and turns it into a WordPress post.

- A few helper scripts to automate image conversion and upload to WordPress.

These are not “AI projects”. They are regular projects made faster because an agent could grab the right tool at the right time. For example, when I forgot the exact flags for an image converter, the agent tried a few, read the errors, corrected the command, and moved on. No drama. No deep magic. Just calm progress (well, as long as you keep giving it enough context). If you want a more structured approach to loops, the LangGraph community talks about graph‑based control for agents (docs).
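The "try a few flags, read the errors, move on" behaviour from the image converter story can be approximated with a short helper. This is a simplified sketch: the name `first_working` is hypothetical, and a fixed list of candidate commands stands in for the corrections a real agent would come up with after reading each error. The demo commands just call the Python interpreter so the example runs anywhere.

```python
import subprocess
import sys

def first_working(commands, timeout=30):
    """Run each candidate command in order.

    Returns (command, stdout, errors) for the first command that exits with
    code 0, where errors lists the (command, stderr) pairs that failed first.
    Returns (None, "", errors) if every candidate fails.
    """
    errors = []
    for cmd in commands:
        proc = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout)
        if proc.returncode == 0:
            return cmd, proc.stdout, errors
        errors.append((cmd, proc.stderr.strip()))
    return None, "", errors

candidates = [
    [sys.executable, "-c", "import sys; sys.exit('bad flag')"],  # fails on purpose
    [sys.executable, "-c", "print('ok')"],                       # succeeds
]
cmd, out, errors = first_working(candidates)
print(out.strip(), errors[0][1])
```

The failed attempts are kept rather than thrown away, because the error text is exactly what the agent reads before trying again.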

A down-to-earth definition

So let me rewrite it in my own words, in plain English that any reader can take away:

- An agent is a language model that can call tools.

- It plans a step, calls a tool, reads the result, and plans the next step.

- It repeats the loop until it reaches the goal, or it stops based on rules.

- The power comes from the tools and the loop, not from some secret brain.

- The benefit is speed, focus, and less context switching for me and you.

Now it sounds more familiar, right? Imagine a man with a toolbox: he can be a handyman. That’s the vibe.

Why this clicks with VIBE/vibe coding

Vibe coding for me means working with feel, not only with a strict plan. It is small steps, constant feedback, and listening to the code. Agents fit this style because they are patient and good at the boring parts:

- They remember small details across steps in a single task.

- They test small changes quickly.

- They don’t get annoyed when they have to try again.

In Vietnamese we might say “không vội được đâu” (roughly, “you can’t rush it”); do it gently. That’s how these loops feel. A soft, steady rhythm.

Tools I actually use

To be concrete, here are tools that helped me most:

- GitHub MCP server to search repos, read issues, and draft PR descriptions. The Model Context Protocol (MCP) is becoming the standard way to connect tools to LLMs (docs).

- Build/lint/test/typecheck runners, often called by the agent during a loop.

- File access for reading and writing local assets.

- Jupyter and Python for quick experiments and data checks.

- Simple shell scripts for image processing before upload to WordPress.

- And of course, a whole bunch of documents such as README, AGENTS.md, changelogs, design notes, and API references, all read by the agent as context so it can make better decisions.

You can start with just one or two. The agent doesn’t need a big toolbox to be useful. Psst! The whole Vibe coding thing might be easy at first, but it is a rabbit hole. Once you start, you’ll want to add more tools, more context, and more goals. It’s addictive, but you will learn a lot.
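For the documents in the list above, here is one way the "read as context" step could look. It is a sketch under my own assumptions: the file names in `CONTEXT_FILES`, the `gather_context` function, and the character cap are all illustrative, and real tools decide for themselves which files to load and how much of each.

```python
from pathlib import Path

# Assumed file names; adjust to whatever your project actually contains.
CONTEXT_FILES = ["README.md", "AGENTS.md", "CHANGELOG.md"]

def gather_context(project_dir, names=CONTEXT_FILES, max_chars=4000):
    """Concatenate the context files that exist, trimming each to max_chars."""
    sections = []
    for name in names:
        path = Path(project_dir) / name
        if not path.is_file():
            continue  # missing files are simply skipped
        text = path.read_text(encoding="utf-8")
        if len(text) > max_chars:
            text = text[:max_chars] + "\n[truncated]"
        sections.append(f"## {name}\n{text.strip()}")
    return "\n\n".join(sections)

print(gather_context("."))
```

The cap matters more than it looks: every character of context costs tokens on every step of the loop.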

@startuml

title Simple Architecture – Agent with Tools

actor User

component "LLM Agent" as Agent

node "VS Code" as VSC

database "Local Files\n(CIFS)" as Files

component "Build/Test Runner" as Build

component "Jupyter/Python" as Jupyter

component "GitHub MCP" as GH

cloud "WordPress" as WP

User --> Agent : Goals

Agent --> VSC : Edit/Run commands

Agent --> Build : build/lint/test

Agent --> Jupyter : notebooks/scripts

Agent --> GH : repo search/issues

Agent --> Files : read/write assets

Agent --> WP : publish post

VSC .. Files

Jupyter .. Files

@enduml

When it fails—and how to recover

Sometimes the agent gets stuck:

- Context decay: it forgets something important from the beginning.

- Rotted context: an early wrong assumption stays in memory.

- Poor tool choice: it keeps calling the same tool that cannot solve the problem.

What helps me: shorter goals, tighter bounds, clearer tool descriptions, and a quick reset. “Okay, new plan—start fresh, use only these two tools, maximum five steps.” This resets the vibe and usually works.

Tip: if the agent gets stupid after a while and you feel something is off, feel free to stop, drop the session, open a new chat, and start fresh.
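The "poor tool choice" failure and the fresh-start recovery can both be sketched in a few lines. The function names `repeated_calls` and `fresh_start`, the window and threshold values, and the settings dictionary are assumptions of mine, not features of any particular agent.

```python
from collections import Counter

def repeated_calls(history, window=4, threshold=3):
    """Spot an agent that keeps making the same call.

    history is a list of (tool, argument) tuples. Returns the call that
    appears at least `threshold` times in the last `window` entries, else None.
    """
    recent = history[-window:]
    if not recent:
        return None
    call, count = Counter(recent).most_common(1)[0]
    return call if count >= threshold else None

def fresh_start(goal, allowed_tools, max_steps=5):
    """Settings for a restarted session: same goal, empty history, tighter bounds."""
    return {
        "goal": goal,
        "tools": sorted(allowed_tools),
        "max_steps": max_steps,
        "history": [],
    }

history = [("build", ""), ("lint", "src"), ("lint", "src"), ("lint", "src")]
stuck_on = repeated_calls(history)
if stuck_on is not None:
    session = fresh_start("fix the lint errors", {"lint", "read_file"})
    print(stuck_on, session["tools"], session["max_steps"])
```

Dropping the old history is the point: a wrong assumption from an earlier round cannot follow the agent into the new session.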

So… why am I happy with AI agents as coders?

Because they let me stay human. I can write, sketch, and think in simple language. The agent translates my goal into tool actions and returns proof. Not ideas—proof. A log. A diff. A running server. That’s all I need most days.

If you are new to this, try a tiny project this week. Pick a task that takes you 30–60 minutes by hand. Give it to an agent with a small set of tools. Set bounds. Watch the loop. You’ll see what I mean: not magic, just useful.

— Lee Cuong

@startuml

title LLM Agent: Tools-in-a-Loop (bounded)

actor User as U

participant "LLM Agent" as A

box "Tools" #DDDDDD

participant Build as Build

participant "GitHub MCP" as GitHub

participant "CIFS/File Access" as CIFS

participant "Jupyter/Python" as Jup

end box

U -> A: Give goal

loop Until goal or stop

A -> A: Plan next step

alt Build/test

A -> Build: run build/lint/test

Build --> A: results

else GitHub

A -> GitHub: search repo / issues

GitHub --> A: data

else Jupyter/Python

A -> Jup: run notebook/script

Jup --> A: output

else File ops

A -> CIFS: read/write assets

CIFS --> A: status

end

A -> A: Evaluate, update memory

end

A --> U: Final result + logs

@enduml

References

- Simon Willison — “An LLM agent runs tools in a loop to achieve a goal.” Clear definition and practical framing (2025): https://simonwillison.net/2025/Sep/18/agents/

- Anthropic — Claude Tool Use docs. How LLMs call tools/functions safely and repeatedly: https://docs.anthropic.com/en/docs/tool-use

- OpenAI — Function calling update (June 2023). The API pattern that kicked off mainstream tool use: https://openai.com/blog/function-calling-and-other-api-updates

- Microsoft/Wu et al. — AutoGen (2023). Multi‑agent conversation patterns and supervision: https://arxiv.org/abs/2308.08155

- LangChain — LangGraph (2024). Graph‑based control for agent loops and retries: https://langchain-ai.github.io/langgraph/

- Model Context Protocol (2024). A standard way to connect tools and services to LLMs: https://modelcontextprotocol.io

- OpenAI — Assistants/GPTs announcements (2023–2024). Examples of bounded, tool‑using “agents” in production: https://openai.com